Kubernetes is an orchestration layer for Docker and other container runtimes. In this article, let's see how to set up a Kubernetes cluster, or K8s cluster for short.

Steps involved in setting up a Kubernetes cluster

- Create VMs which are part of K8s cluster (master and worker nodes).

- Disable SELinux and swap on all nodes.

- Install kubeadm, kubelet, kubectl and Docker on all nodes.

- Start and enable Docker and kubelet on all nodes.

- Initialize the master node.

- Configure the Pod network on the master node.

- Join all worker nodes to the cluster.

- Verify the configuration.

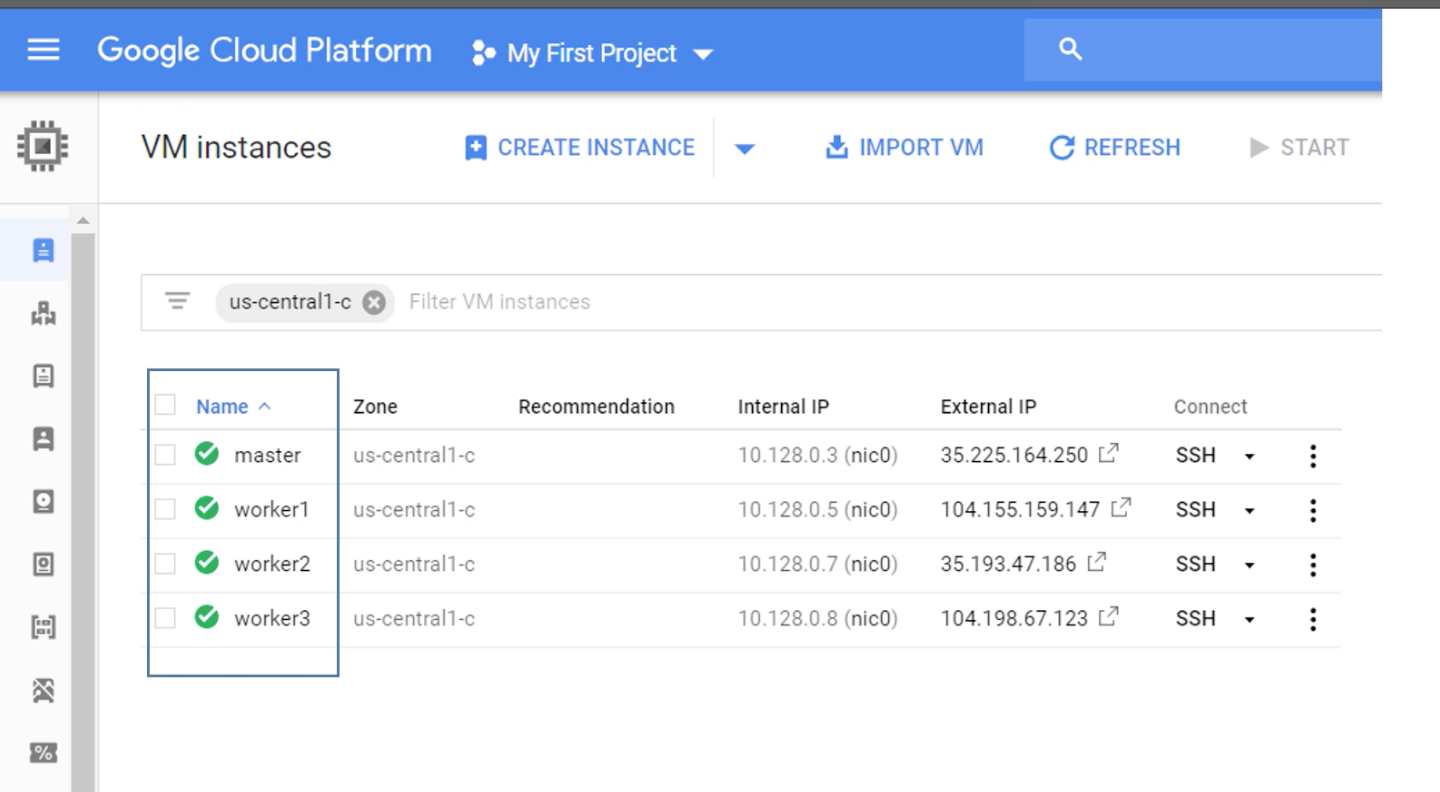

Create VMs

This example uses four VMs (one master and three worker nodes) created in Google Cloud Platform.

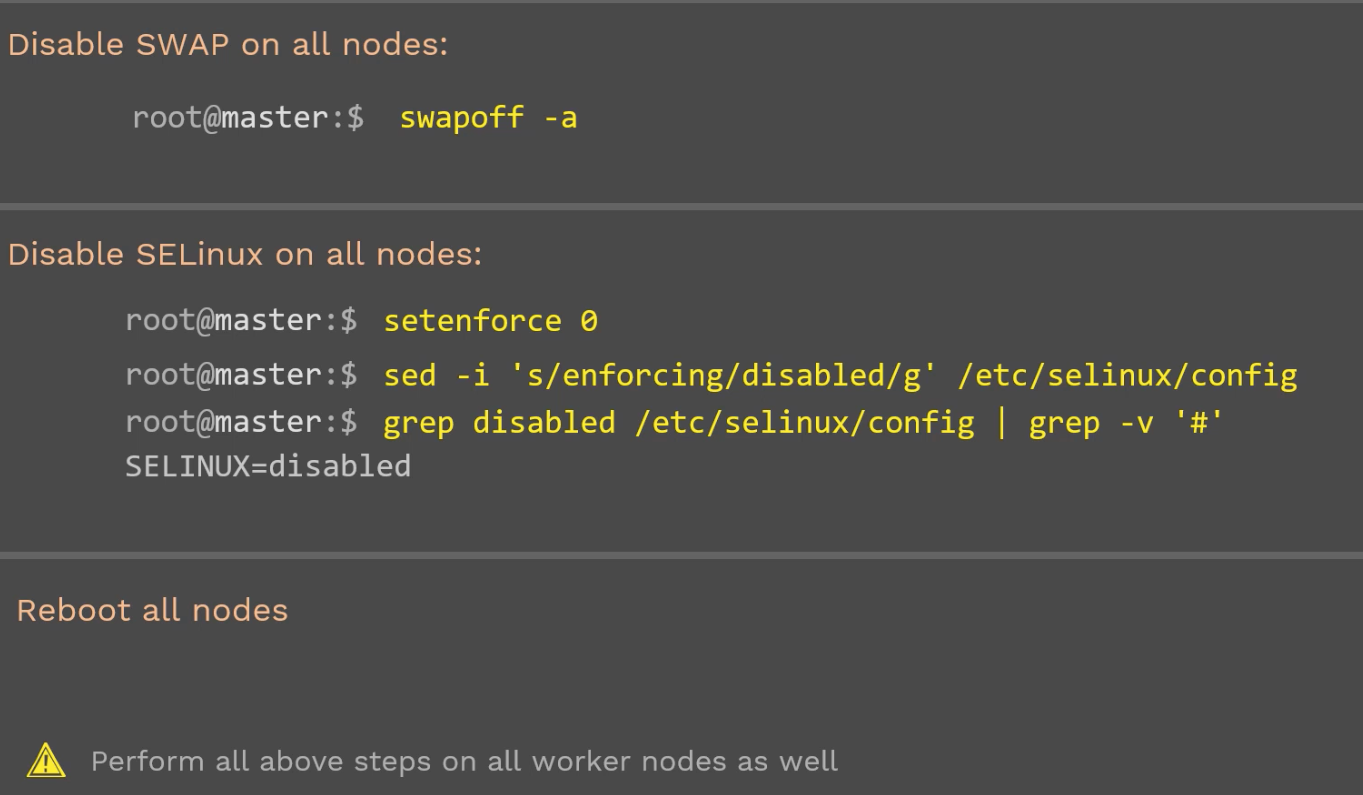

Disable SELinux and swap on all nodes

Disable swap on all nodes:

root@master1:$ swapoff -a

Repeat the above step on all nodes.
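Note that swapoff -a only lasts until the next reboot. A minimal sketch of one way to keep swap off permanently is to comment out the swap entries in /etc/fstab. The function name disable_swap_in_fstab is my own, and it assumes a standard fstab layout with whitespace-separated fields, so try it on a copy of the file first:

```shell
# Comment out every active swap entry in an fstab-style file.
# Defaults to /etc/fstab; pass another path to try it on a copy first.
# (disable_swap_in_fstab is an illustrative name, not a standard tool.)
disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    # Skip lines that are already comments, then prefix '#' to any line
    # containing a whitespace-delimited "swap" field.
    # A backup of the original file is kept as <file>.bak.
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}
```

On a real node you would call disable_swap_in_fstab with no argument; the original file stays available as /etc/fstab.bak.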

Disable SELinux on all nodes:

root@master1:$ setenforce 0

root@master1:$ sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

root@master1:$ grep disabled /etc/selinux/config | grep -v '#'

SELINUX=disabled

Repeat the above steps on all nodes.

Reboot all nodes.
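Since most steps in this article have to be repeated on every node, a small helper can save some typing. This is only a sketch: the host names in NODES and the function name run_on_all_nodes are hypothetical, and it assumes root SSH access with key-based login to each VM.

```shell
# Hypothetical host names; replace with the names or IPs of your own VMs.
NODES="master1 worker1 worker2 worker3"

# Run one command on every node over SSH as root.
# Set DRY_RUN=1 to print the ssh invocations instead of executing them.
run_on_all_nodes() {
    local node
    for node in $NODES; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh root@$node $*"
        else
            ssh "root@$node" "$@"
        fi
    done
}
```

For example, DRY_RUN=1 run_on_all_nodes swapoff -a prints the four ssh commands without running anything, which is a cheap way to check the node list before doing it for real.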

Install kubeadm, kubelet, kubectl and docker

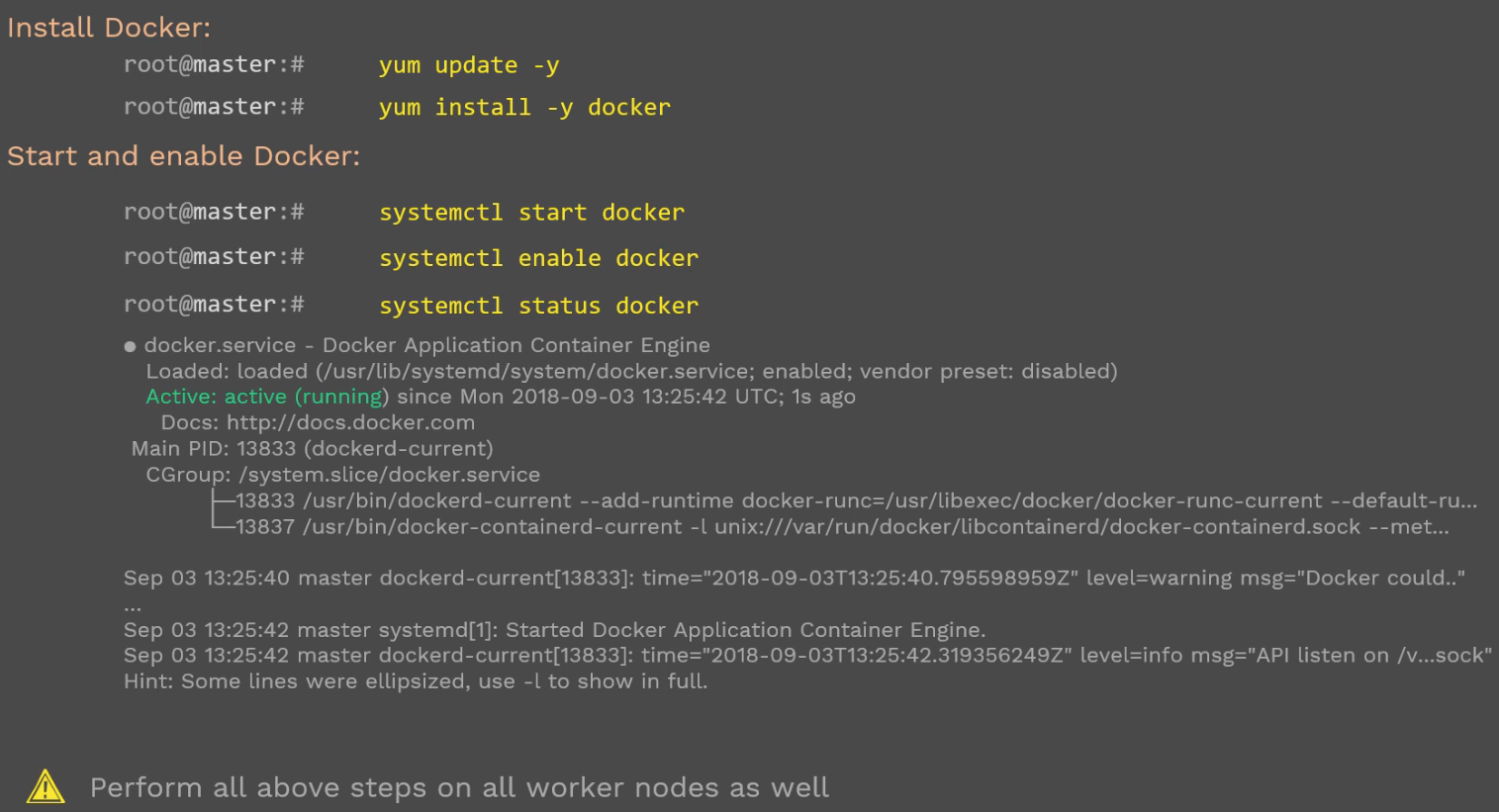

- Docker Installation

Install Docker:

root@master1:$ yum install -y

root@master1:$ yum install -y docker

Start and enable docker:

root@master1:$ systemctl start docker

root@master1:$ systemctl enable docker

Repeat the above steps on all worker nodes as well. Docker's running status on all nodes (master and worker) can be verified with:

root@master1:$ systemctl status docker

- Install kubeadm, kubectl and kubelet

Add the Kubernetes repo:

root@master1:$ cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kube*

EOF

Install kubelet, kubeadm and kubectl, then start kubelet:

root@master1:$ yum install -y kubeadm kubelet kubectl --disableexcludes=kubernetes

root@master1:$ systemctl enable kubelet && systemctl start kubelet

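Repeat the above steps on all worker nodes as well. kubelet is a systemd service, so its status on all nodes (master and worker) can be verified with:

root@master1:$ systemctl status kubelet

Until kubeadm init or kubeadm join has run on a node, kubelet restarts every few seconds while it waits for its configuration; this is expected.

kubectl is a command-line client rather than a service, so verify it by printing its version:

root@master1:$ kubectl version --client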
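To check both services at a glance instead of reading the full status output, something like the following sketch could be used. The function name report_units is my own; it relies only on the systemctl is-enabled and is-active subcommands.

```shell
# Print one summary line per systemd unit: whether it is enabled at boot
# and whether it is currently active.
# (report_units is an illustrative helper, not part of systemd.)
report_units() {
    local unit enabled active
    for unit in "$@"; do
        if systemctl is-enabled "$unit" >/dev/null 2>&1; then
            enabled=yes
        else
            enabled=no
        fi
        if systemctl is-active "$unit" >/dev/null 2>&1; then
            active=yes
        else
            active=no
        fi
        printf '%s enabled=%s active=%s\n' "$unit" "$enabled" "$active"
    done
}
```

Calling report_units docker kubelet on each node gives two short lines to compare. Before the node has been initialized or joined, kubelet may show active=no from time to time because it is still restarting.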

If you are on RHEL or CentOS 7, perform the steps below as well:

root@master1:$ cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

EOF

root@master1:$ sysctl --system
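The net.bridge.* keys exist only while the br_netfilter kernel module is loaded (modprobe br_netfilter loads it). The sketch below, with a function name of my own choosing, reads the two values straight from /proc and reports whether they are set to 1. The optional argument is only there so the function can be pointed at a test directory.

```shell
# Report whether the bridge netfilter settings are present and set to 1.
# proc_root defaults to /proc.
# (check_bridge_nf is an illustrative helper name.)
check_bridge_nf() {
    local proc_root="${1:-/proc}"
    local key file value status=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        file="$proc_root/sys/net/bridge/$key"
        if [ ! -r "$file" ]; then
            echo "$key: missing (is the br_netfilter module loaded?)"
            status=1
            continue
        fi
        value=$(cat "$file")
        if [ "$value" = "1" ]; then
            echo "$key: ok"
        else
            echo "$key: $value (expected 1)"
            status=1
        fi
    done
    return "$status"
}
```

The function returns a non-zero exit status if either key is missing or not 1, so it can be used in a script as check_bridge_nf || exit 1.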

Initialize the master node

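Only on the master node. The Flannel manifest used in this article defaults to the pod network 10.244.0.0/16, so pass that range to kubeadm init:

root@master1:$ kubeadm init --pod-network-cidr=10.244.0.0/16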

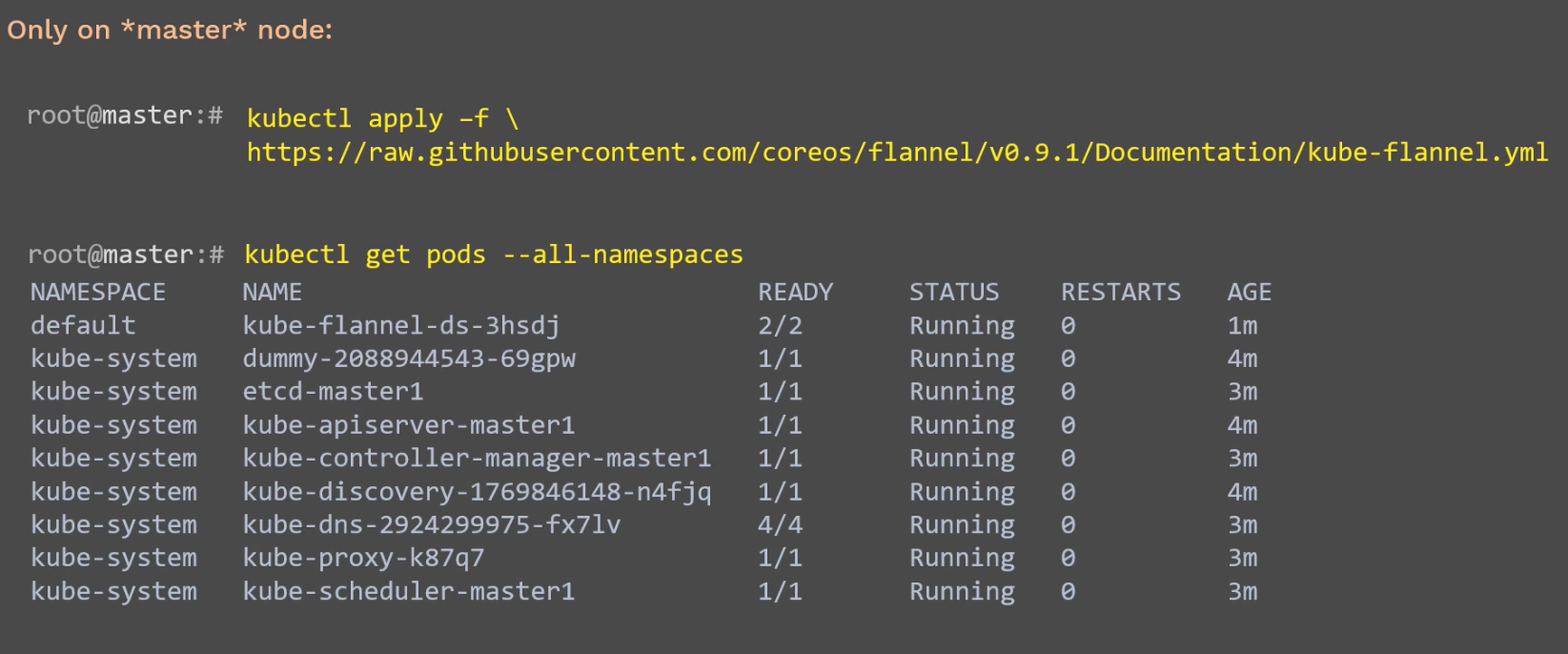
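kubeadm init ends by printing instructions for copying the admin kubeconfig so that kubectl can talk to the new cluster. A sketch of that step as a small function follows; setup_kubeconfig is my own name, and the default paths are the ones kubeadm normally uses.

```shell
# Copy the cluster admin kubeconfig to where kubectl looks for it.
# src defaults to /etc/kubernetes/admin.conf, dest to ~/.kube/config.
# (setup_kubeconfig is an illustrative helper name.)
setup_kubeconfig() {
    local src="${1:-/etc/kubernetes/admin.conf}"
    local dest="${2:-$HOME/.kube/config}"
    mkdir -p "$(dirname "$dest")"
    cp "$src" "$dest"
    # Make the current user the owner and keep the credentials private.
    chown "$(id -u):$(id -g)" "$dest"
    chmod 600 "$dest"
}
```

When working as root, as in this article, an alternative is to set the KUBECONFIG environment variable to /etc/kubernetes/admin.conf for the current shell session.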

Configure the Pod network

Only on the master node:

root@master1:$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.9.1/Documentation/kube.flannel.yml

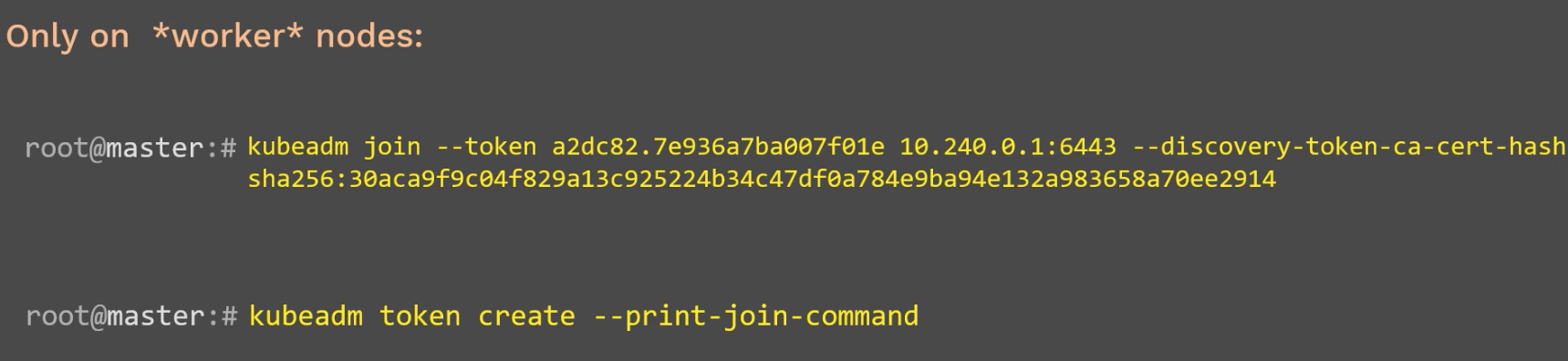
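The range given to --pod-network-cidr should agree with the Network value in the net-conf.json section of the Flannel manifest. If you have downloaded the manifest, a rough way to check this is sketched below. The function names are hypothetical, and the sed pattern assumes the "Network": "<cidr>" form used in the manifest.

```shell
# Print the CIDR from the first  "Network": "<cidr>"  entry in a manifest file.
# (flannel_network and cidr_matches_flannel are illustrative helper names.)
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if the manifest's Network equals the given pod network CIDR.
cidr_matches_flannel() {
    [ "$(flannel_network "$1")" = "$2" ]
}
```

For example, cidr_matches_flannel kube-flannel.yml 10.244.0.0/16 succeeds when the downloaded manifest and the kubeadm init option use the same range. If they differ, either edit the manifest or re-run kubeadm init with the matching value.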

Join worker nodes to the cluster

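kubeadm init prints a ready-made join command at the end of its output. If you no longer have it, regenerate it on the master node:

root@master1:$ kubeadm token create --print-join-command

Then run the printed command on each worker node only:

root@worker1:$ kubeadm join --token <token id> 10.240.0.1:6443 --discovery-token-ca-cert-hash <sha256>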

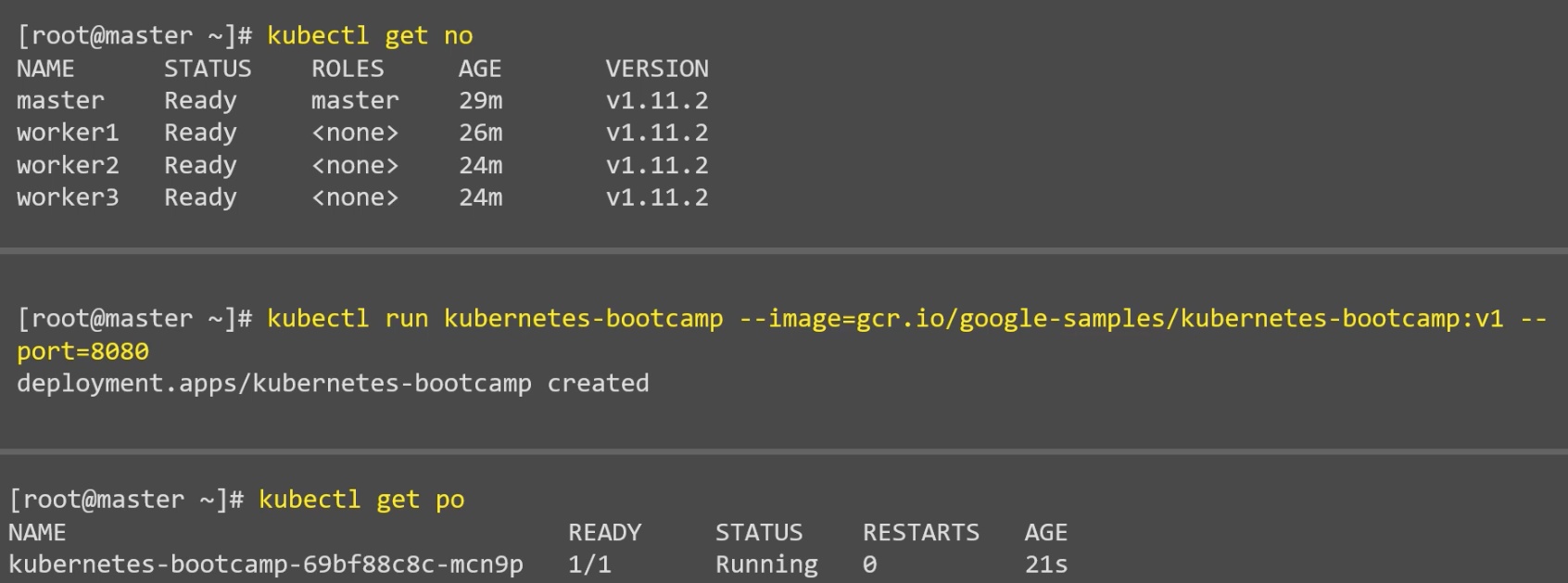
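The value for --discovery-token-ca-cert-hash is the SHA-256 hash of the cluster CA's public key, written with a sha256: prefix. If you ever need to compute it by hand, the kubeadm documentation describes an openssl pipeline for it. Wrapped in a function, it might look like the sketch below; the name ca_cert_hash is mine, and it assumes an RSA CA key, which is the kubeadm default.

```shell
# Print the hex SHA-256 hash of the public key in a CA certificate.
# Defaults to the CA certificate path that kubeadm writes on the master node.
# (ca_cert_hash is an illustrative helper name; assumes an RSA key.)
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On the master node, echo "sha256:$(ca_cert_hash)" prints the value in the form that kubeadm join expects.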

Verifications

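List the nodes; once the Pod network is up, every node should report Ready:

root@master1:$ kubectl get no

Deploy a sample application:

root@master1:$ kubectl run kubernetes-bootcamp --image=gcr.io/google-samples/kubernetes-bootcamp:v1 --port=8080

Check that its pod reaches the Running state:

root@master1:$ kubectl get po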
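For a scripted version of the node check, the sketch below lists every node whose STATUS column is anything other than Ready. The function name not_ready_nodes is my own. Note that a cordoned node shows a status such as Ready,SchedulingDisabled and would also be listed.

```shell
# Print the names of nodes whose STATUS is not exactly "Ready".
# With --no-headers, column 1 is the node name and column 2 is STATUS.
# (not_ready_nodes is an illustrative helper name.)
not_ready_nodes() {
    kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}
```

An empty result means all nodes are Ready. Right after joining, workers usually stay NotReady for a short while until the Flannel pods have started on them.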